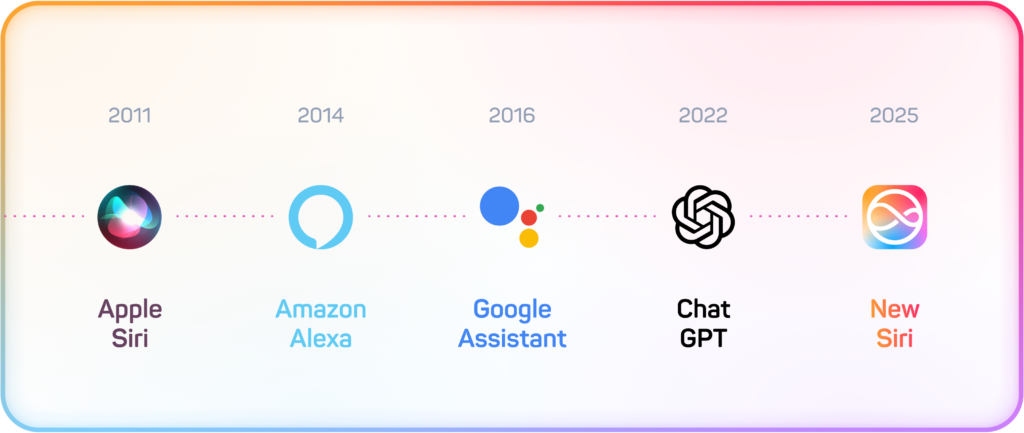

Siri, Google Assistant and ChatGPT have all promised to revolutionise how we interact with our devices, but they either don't understand us or can't actually do much. With the advent of Apple Intelligence and the next generation of Siri, however, we are on the cusp of change: the era of handy digital assistants that both understand and act could finally be upon us. What does this mean for app developers and the future of user interfaces?

From ChatGPT to Apple Intelligence: A new era of AI assistants in our smartphones

Big promises, small results

Artificial intelligence and its capabilities are among the most discussed topics in technology today. Yet despite all the advances in text generation and conversation, our digital assistants remain surprisingly limited. ChatGPT and Claude can hold sophisticated conversations, and ChatGPT has recently started managing tasks as well, but both remain confined to their own environments.

For all their “intelligence”, these assistants lack the most important thing: the ability to act in the world around us. They can’t access data on our devices, launch apps or carry out complex tasks across the system on our behalf. Rather than true assistants, they are more like competent advisors: they can tell us what to do but rarely do anything for us.

Key limitations of current AI assistants

Lack of context and personalisation

The main problem with today’s assistants is the lack of context. They know virtually nothing about the user or their device, so users must constantly explain and refine their requests. Unlike real human conversation, where we subconsciously adapt what we say to the other person’s knowledge and situation, AI assistants start every interaction almost from scratch. The result is communication that is more laborious, slower and less intuitive.

The inability to act

Today’s assistants can understand what we want them to do but can’t do much on their own. ChatGPT can compose a great email for you, but you still have to send it yourself. This fundamental limitation stems from a lack of integration with operating systems and applications: assistants are often isolated in separate ecosystems, without direct access to the features they would need to complete tasks.

Language barrier

While today’s assistants are proficient in many languages, their abilities vary dramatically depending on how widely the language is spoken. The quality of understanding and of generated responses tends to be lower for globally underrepresented languages such as Czech. For Czech users, this means they either have to communicate in English or settle for more limited functionality.

Privacy and connection dependency

Most advanced AI assistants run in the cloud, which brings two significant problems. First, they require a stable internet connection, which limits their usability in some situations. Second, we send our data to third parties whom we must trust to handle it responsibly. This is a significant obstacle for users who value their privacy.

Apple Intelligence and the new era of AI assistants

An ambitious vision for integration

The basic capabilities of AI models are unlikely to improve dramatically anytime soon. However, their integration into devices and software is evolving rapidly. We are seeing efforts at standardisation through protocols such as MCP (Model Context Protocol), which aim to make data available to assistants and let them perform actions on the user’s behalf. The success of these initiatives, though, depends on whether app developers and operating system vendors are willing to adopt them.
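To make the idea behind MCP more concrete: the protocol is built on JSON-RPC 2.0, and an app acting as an MCP "server" advertises its actions as named tools that an assistant can discover with `tools/list` and invoke with `tools/call`. The TypeScript sketch below is a simplified, hand-written illustration under those assumptions, not the official MCP SDK. The `create_note` tool, its arguments and the in-process `handle` function are hypothetical, and transport, initialisation and capability negotiation are deliberately left out.

```typescript
// Simplified sketch of MCP-style tool messages over JSON-RPC 2.0.
// NOT the official MCP SDK: transport, initialisation and capabilities are omitted.
type JsonRpcRequest = {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
};

type JsonRpcResponse =
  | { jsonrpc: "2.0"; id: number; result: unknown }
  | { jsonrpc: "2.0"; id: number; error: { code: number; message: string } };

type ToolContent = { type: "text"; text: string };
type ToolHandler = (args: Record<string, unknown>) => ToolContent[];

// Hypothetical tool a notes app might expose; the name and arguments are illustrative only.
const tools: Record<string, { description: string; inputSchema: object; handler: ToolHandler }> = {
  create_note: {
    description: "Create a new note with the given title and body",
    inputSchema: {
      type: "object",
      properties: { title: { type: "string" }, body: { type: "string" } },
      required: ["title"],
    },
    handler: (args) => [{ type: "text", text: `Created note "${String(args.title)}"` }],
  },
};

// Answer a single request roughly the way an MCP server handles tools/list and tools/call.
function handle(req: JsonRpcRequest): JsonRpcResponse {
  if (req.method === "tools/list") {
    const list = Object.entries(tools).map(([name, t]) => ({
      name,
      description: t.description,
      inputSchema: t.inputSchema,
    }));
    return { jsonrpc: "2.0", id: req.id, result: { tools: list } };
  }
  if (req.method === "tools/call") {
    const name = String(req.params?.name);
    const tool = Object.prototype.hasOwnProperty.call(tools, name) ? tools[name] : undefined;
    if (!tool) {
      // -32602 (invalid params) is used here for an unknown tool name.
      return { jsonrpc: "2.0", id: req.id, error: { code: -32602, message: `Unknown tool: ${name}` } };
    }
    const args = (req.params?.arguments ?? {}) as Record<string, unknown>;
    return { jsonrpc: "2.0", id: req.id, result: { content: tool.handler(args) } };
  }
  // -32601 is the standard JSON-RPC 2.0 "Method not found" error.
  return { jsonrpc: "2.0", id: req.id, error: { code: -32601, message: `Method not found: ${req.method}` } };
}

// A request an assistant might send after interpreting "Paste this photo into a new note".
const call: JsonRpcRequest = {
  jsonrpc: "2.0",
  id: 2,
  method: "tools/call",
  params: { name: "create_note", arguments: { title: "Trip photo", body: "image attached" } },
};

console.log(JSON.stringify(handle(call)));
```

The point of the design is that the app only describes *what* it can do (a name, a description and a JSON Schema for the arguments); deciding *when* to call the tool, and with which arguments, is left to the assistant.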

Apple Intelligence in practice: specific use cases

What will the new Siri do? Let’s take a look at some practical examples that illustrate how a truly connected assistant can simplify everyday tasks:

- Working with content across apps: Users can enter a command like “Take the photo Michael sent me yesterday on iMessage and email it to Peter.” The assistant can find the correct photo in the conversation history, open the email app, create a new message for the correct recipient, and attach the selected file.

- Quickly organise information: Commands like “Paste this photo into a new note” or “Create a calendar event from this email invitation” will be executed without the user manually switching between apps and copying content.

- Contextual search: The user can ask for information related to what they have on their device, such as “When was the last time I edited a presentation for client XYZ?” or “Find all documents that mention Project Alpha”.

- Complex tasks: Siri can also handle more complex sequences of commands, such as “Summarize my last three email conversations with Jana and create tasks from them in my to-do list” or “Schedule a meeting with the team for next week when we’re all free and notify them by email.”

Unlike today’s assistants, which often respond with “I can’t do that”, the next generation of AI assistants should be able to carry out these tasks thanks to deeper integration with the operating system and apps.

Local processing is a key advantage

Over the past year, Apple has gradually updated its devices and increased their memory to allow language models to run directly on the device without needing an internet connection. While these local models cannot match the performance of large cloud-based solutions like ChatGPT, they should be sufficient for everyday conversation and for performing a range of actions in collaboration with third-party apps. In doing so, Apple elegantly addresses both the privacy and the connectivity problems.

Challenges and delays

The recent announcement that the new version of Siri will be delayed suggests that the implementation will not be without complications. The currently published list of supported actions is limited and focuses primarily on system functions such as working with images or emails. Question marks also remain over support for various languages, including Czech. However, with each new operating system version, we can expect functionality to expand and performance to improve.

Implications and future outlook

Transforming the user experience

If the new Siri works reliably, it could fundamentally change how we use our devices. Even users who today refuse to talk to their phone or computer may come to see voice control as the natural choice because of its simplicity and intuitiveness. It could be especially beneficial for users with visual or motor impairments, for whom an assistant can make previously hard-to-use functions accessible.

Practical use in everyday life

Imagine an assistant that can answer questions and, for example, use a camera to describe what is in front of a user with a visual impairment, read information aloud, or perform complex actions across applications. Such an assistant could also be invaluable for professionals working in the field with their hands full of tools, for drivers behind the wheel, or for anyone in a situation where operating a device by hand is impractical.

Competitive responses and future developments

With its new Siri concept, Apple has the clearest vision of the path to a handy, intelligent and capable assistant. The question is how competitors will react. Companies such as OpenAI (ChatGPT) and Anthropic (Claude) face obstacles because they have limited control over how their solutions integrate into third-party operating systems and applications. Anthropic is trying to address this problem with its MCP initiative, but its success depends on adoption by other developers.

Technology giants such as Microsoft and Google are better positioned to build new capabilities directly into their operating systems and incentivise developers to use them. In the coming years, we can expect intense competition over who can offer the most useful digital assistant, one capable of both understanding and acting.

On the threshold of a new era

We are beginning a new chapter in the history of digital assistants. The transition from intelligent conversational bots to assistants that can act represents a significant qualitative leap. Apple Intelligence and the new Siri may be the first glimmers of a change that will transform how we interact with technology in the long term.

New opportunities for app developers

As developers at eMan, we see the arrival of the next generation of AI assistants as a significant opportunity for innovation. Our teams are already exploring ways to integrate support for systems like Apple Intelligence into our clients’ applications.

For example, imagine an enterprise project management application that, given the voice prompt “Create a report on the status of XYZ project and send it to the entire team”, automatically builds a report with key metrics and distributes it to the relevant stakeholders. Or a corporate communications tool that, when asked to “Summarize all important news from the past week”, analyses the news and produces a structured report.

Are you ready for the era of handy AI assistants? At eMan, we’re at the forefront of these trends, helping our clients prepare their applications for the future of AI interaction. Contact us, and we’ll be happy to help you integrate AI assistant support into your existing or new applications.